MamboLanding

A self-landing UAV people detector.

Authors: S. Galaz, C. Funck, M. Diaz

[Post still under construction]

Almost every autonomous drone navigation system today depends heavily on GPS, which gives the drone a reference point for self-localization. However, this kind of sensor is often highly inaccurate, especially for tasks like landing on a specific point without prior knowledge of the map.

In this project we propose a navigation system for a low-cost drone that requires only a front-facing monocular camera and, with that alone, is able to detect, approach and land in front of a person.

To this end, we implement a Single Shot Detector (SSD) with a trained MobileNet CNN for the vision task, followed by a navigation algorithm that acts on these detections.

Vision

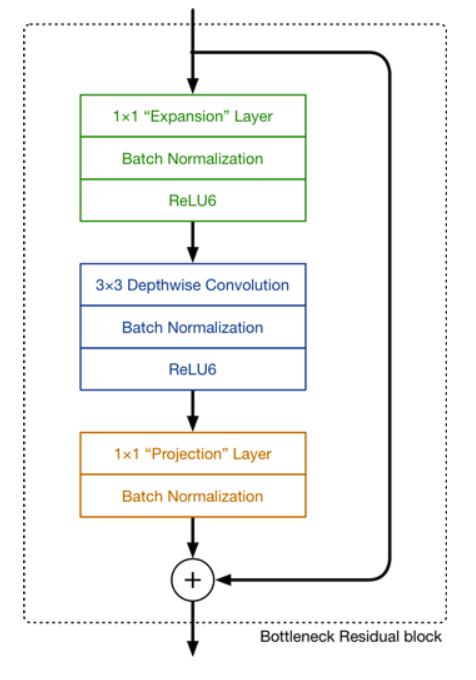

A cool thing about MobileNet is that the architecture tries to preserve the main advantage of ResNets by including a residual block, which helps avoid vanishing or exploding gradients during training. At the same time, it places 1x1 convolutions at the beginning and at the end of each 3x3 layer, which considerably reduces the number of channels and therefore the number of parameters and the inference time. The model was trained on CIFAR-10, and the network has a 20-class output.
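To get a feel for why wrapping a 3x3 layer in 1x1 convolutions saves parameters, here is a small back-of-the-envelope sketch. The channel widths (256 and 64) are assumptions picked for illustration and are not the widths used in this project; biases are ignored and the 3x3 layer is treated as a regular convolution.

```python
def conv_params(kernel_size, in_channels, out_channels):
    """Weight count of a regular convolution layer (biases ignored)."""
    return kernel_size * kernel_size * in_channels * out_channels


# Assumed channel widths, for illustration only.
WIDE = 256    # channels entering and leaving the block
NARROW = 64   # channels seen by the 3x3 layer after the first 1x1

# A single 3x3 convolution working directly on the wide feature map.
plain_3x3 = conv_params(3, WIDE, WIDE)

# 1x1 to shrink the channels, 3x3 on the narrow map, 1x1 to restore them.
wrapped_3x3 = (
    conv_params(1, WIDE, NARROW)
    + conv_params(3, NARROW, NARROW)
    + conv_params(1, NARROW, WIDE)
)

print("plain 3x3 layer:   ", plain_3x3)
print("1x1 -> 3x3 -> 1x1: ", wrapped_3x3)
print("reduction factor:   %.1fx" % (plain_3x3 / wrapped_3x3))
```

With these assumed widths the wrapped block needs roughly 8 times fewer weights than the plain 3x3 layer, which is where the savings in parameters and inference time come from.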

Once we had built the MobileNet, the next step was to use it to build the SSD, which has an output of 24 channels: 20 for the classes plus 4 for the bounding box (cx, cy, w, h).
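As a rough illustration of how one 24-value prediction could be read, the sketch below splits it into class scores and a box. Several things here are assumptions rather than details from the project: the channel order (classes first, box last), the use of a softmax over the class scores, and box values normalized to the image size. The function name `decode_prediction` is hypothetical, and a full SSD would additionally decode boxes relative to its default boxes.

```python
import math

NUM_CLASSES = 20  # class channels, as stated in the text
BOX_DIMS = 4      # cx, cy, w, h


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def decode_prediction(vector):
    """Split one 24-value prediction into (class index, confidence, box).

    Assumed layout: 20 class scores followed by cx, cy, w, h.
    The box is returned as corner coordinates (x_min, y_min, x_max, y_max).
    """
    if len(vector) != NUM_CLASSES + BOX_DIMS:
        raise ValueError("expected %d values" % (NUM_CLASSES + BOX_DIMS))
    probs = softmax(vector[:NUM_CLASSES])
    cx, cy, w, h = vector[NUM_CLASSES:]
    best = max(range(NUM_CLASSES), key=lambda i: probs[i])
    box = (cx - w / 2, cy - h / 2, cx + w / 2, cy + h / 2)
    return best, probs[best], box


# Made-up prediction: class 14 scores highest, box centered in the image.
example = [0.0] * NUM_CLASSES + [0.5, 0.5, 0.2, 0.4]
example[14] = 5.0
print(decode_prediction(example))
```

Converting from center format to corners is convenient for drawing the box or for computing how far the person is from the image center.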

With the combination of SSD and MobileNet, we reached an inference time of 4 milliseconds per image.

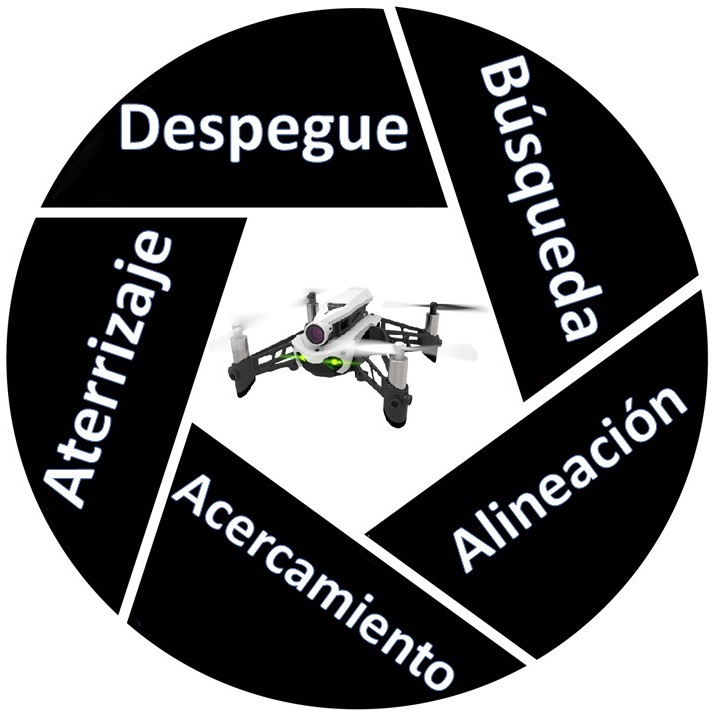

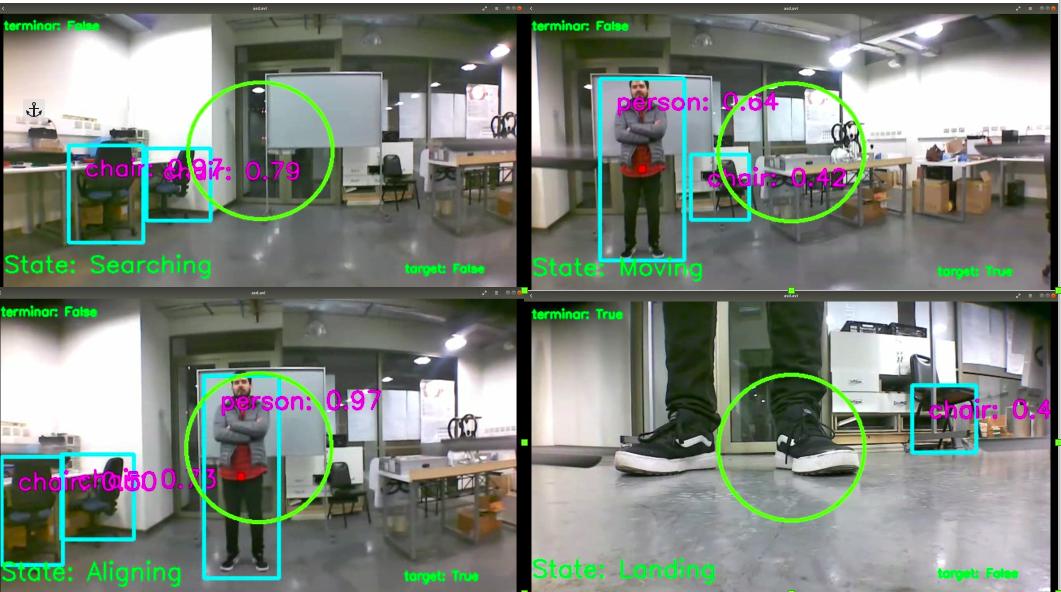

Navigation: 4 defined states

1. Searching

2. Aligning

3. Moving

4. Landing
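The four states above can be pictured as a small state machine driven by the detector output. The sketch below is only one plausible way to wire them together: the transition rules, the thresholds `CENTER_TOLERANCE` and `LANDING_HEIGHT`, and the function `next_state` are all assumptions made for illustration, not the project's actual navigation logic.

```python
from enum import Enum


class State(Enum):
    SEARCHING = 1
    ALIGNING = 2
    MOVING = 3
    LANDING = 4


# Assumed thresholds, in normalized image units (0.0 to 1.0).
CENTER_TOLERANCE = 0.1  # how far off-center the person may be
LANDING_HEIGHT = 0.6    # box height at which the person counts as close


def next_state(state, detection):
    """Pick the next state from the current one and the latest detection.

    `detection` is None when no person is found, otherwise a tuple
    (cx, h): horizontal box center and box height, both normalized.
    """
    if state is State.LANDING:
        return State.LANDING            # landing is final
    if detection is None:
        return State.SEARCHING          # no person in view: keep looking
    cx, h = detection
    if abs(cx - 0.5) > CENTER_TOLERANCE:
        return State.ALIGNING           # person off-center: turn toward them
    if h < LANDING_HEIGHT:
        return State.MOVING             # centered but far: fly forward
    return State.LANDING                # centered and close: land


# Made-up sequence of detections, for illustration only.
state = State.SEARCHING
for detection in [None, (0.9, 0.2), (0.5, 0.2), (0.5, 0.8)]:
    state = next_state(state, detection)
    print(state.name)
```

Using the apparent box height as a distance proxy is a common trick with a monocular camera, since there is no direct depth measurement to rely on.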

Metrics

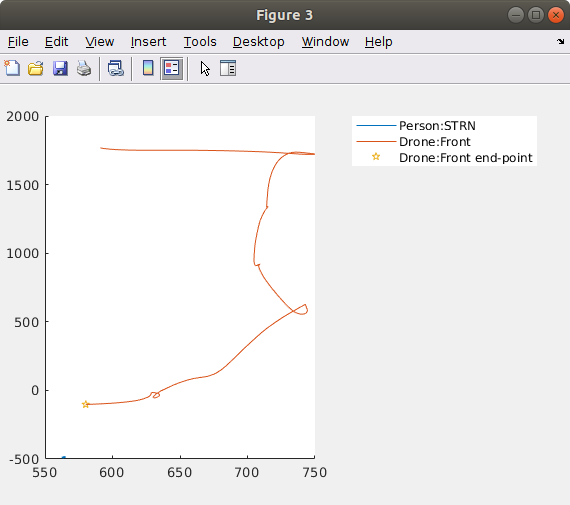

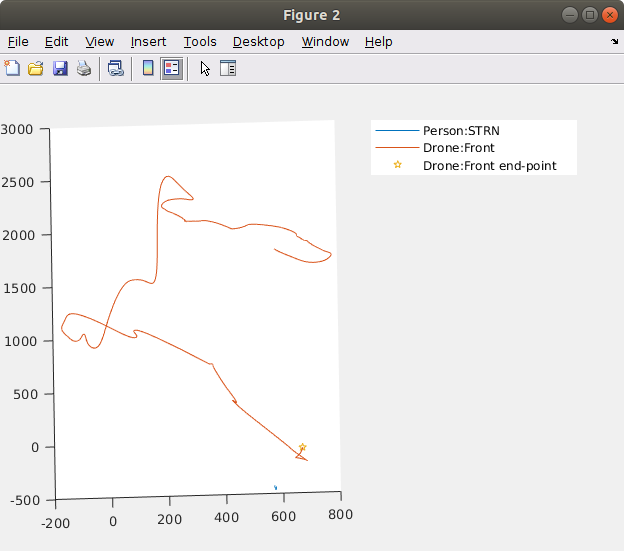

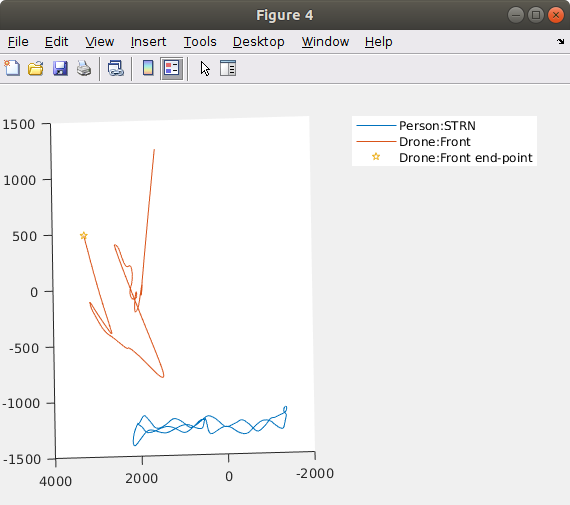

After the course final project, we took the drone to the biomechanics lab (LIBFE) to measure how well the trajectory behaves under more difficult circumstances, such as an occluded target or a dynamic goal where the target is moving. We found that in the occluded case the system is still capable of landing near the target, although it did lose track of the person at some points during the tests, which is why the first two images show some non-optimal trajectories. In the dynamic case, as can be seen in the third image, the system doesn't respond well when the target is moving.